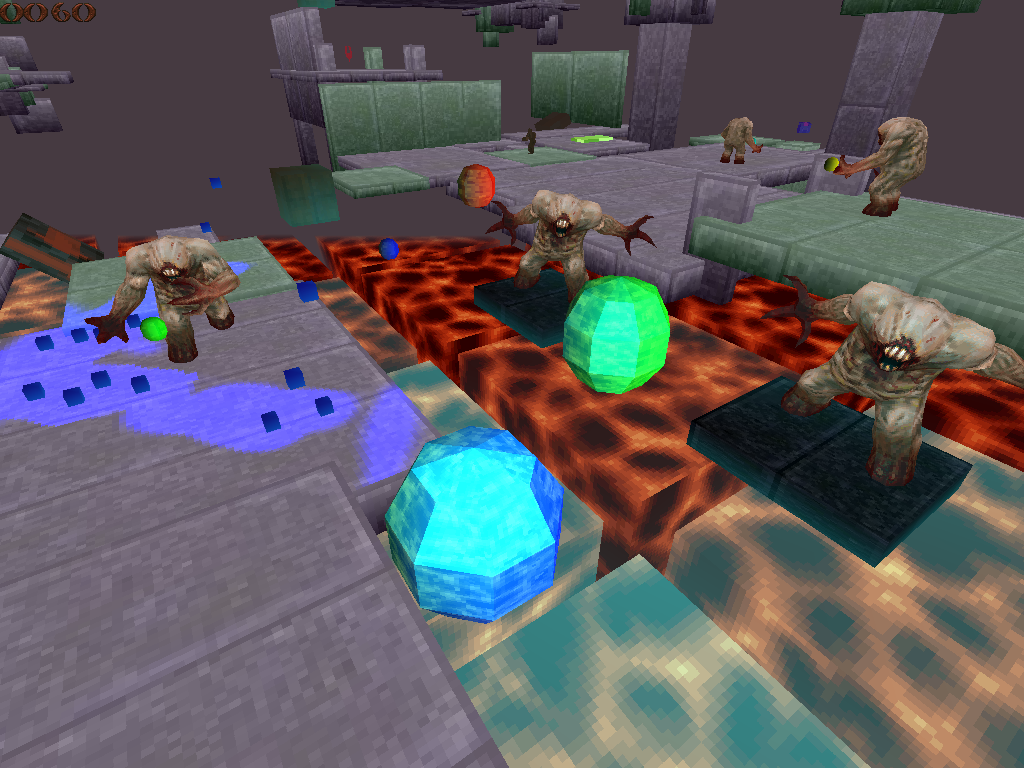

Lamorna engine has a simple but robust collision scheme. Simple, in that it only supports linear collisions against axis aligned surfaces, but robust in that it uses an internal fixed point format to give strong guarantees about precision. The process is multi-threaded, and uses a broad phase check against a bounding volume hierarchy (BVH) to quickly discard non-colliders.

Lamorna engine has a simple but robust collision scheme. Simple, in that it only supports linear collisions against axis aligned surfaces, but robust in that it uses an internal fixed point format to give strong guarantees about precision. The process is multi-threaded, and uses a broad phase check against a bounding volume hierarchy (BVH) to quickly discard non-colliders.

I found collision detection one of the hardest game systems to write, even more so than rendering. Whereas the occasional graphical glitch may be tolerable, collision is unforgiving; pushing through a wall or falling through the floor is a deal breaker for player experience. It wasn’t until switching to a fixed point solution that I was able to completely eliminate the occasional collision failure.

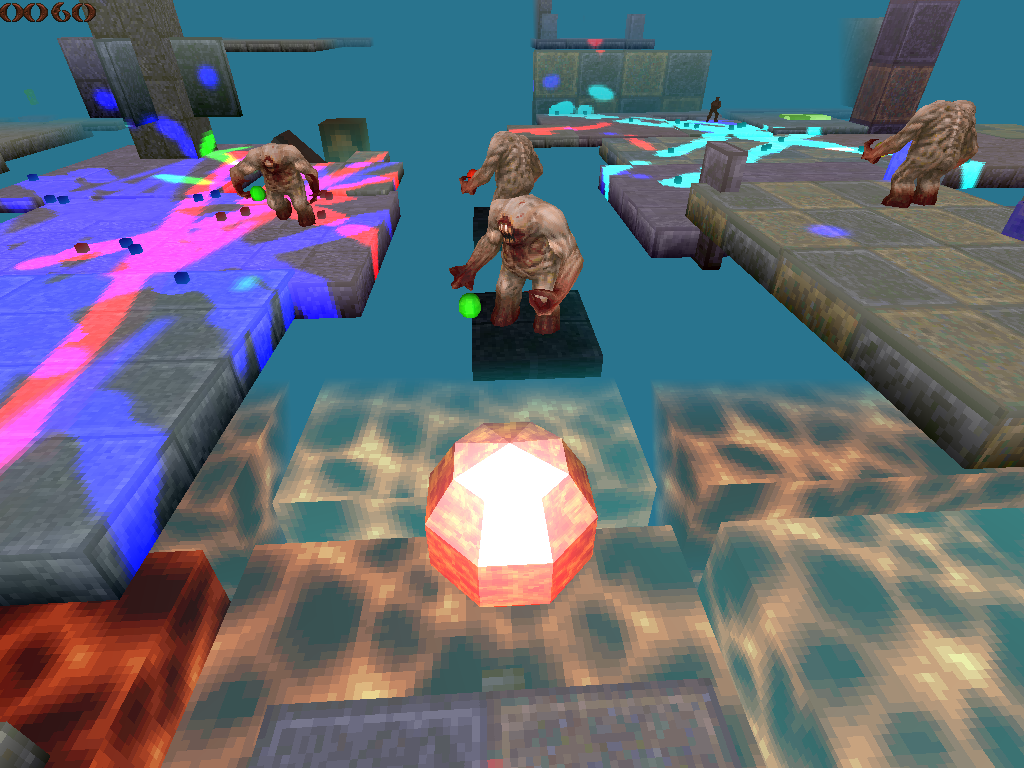

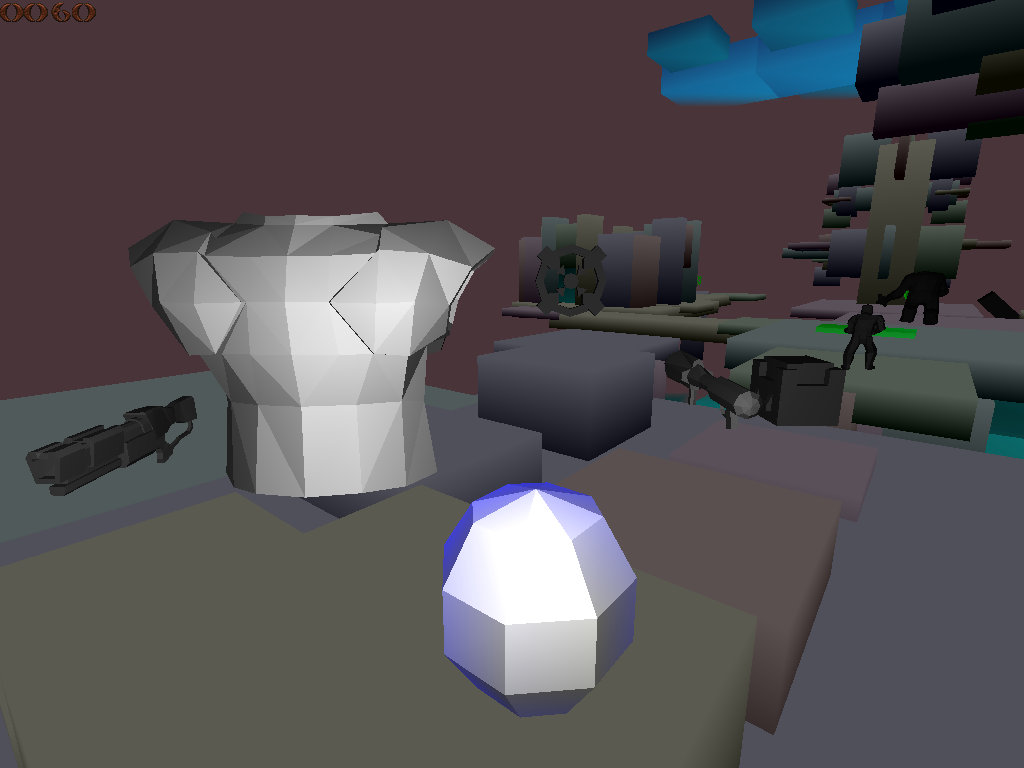

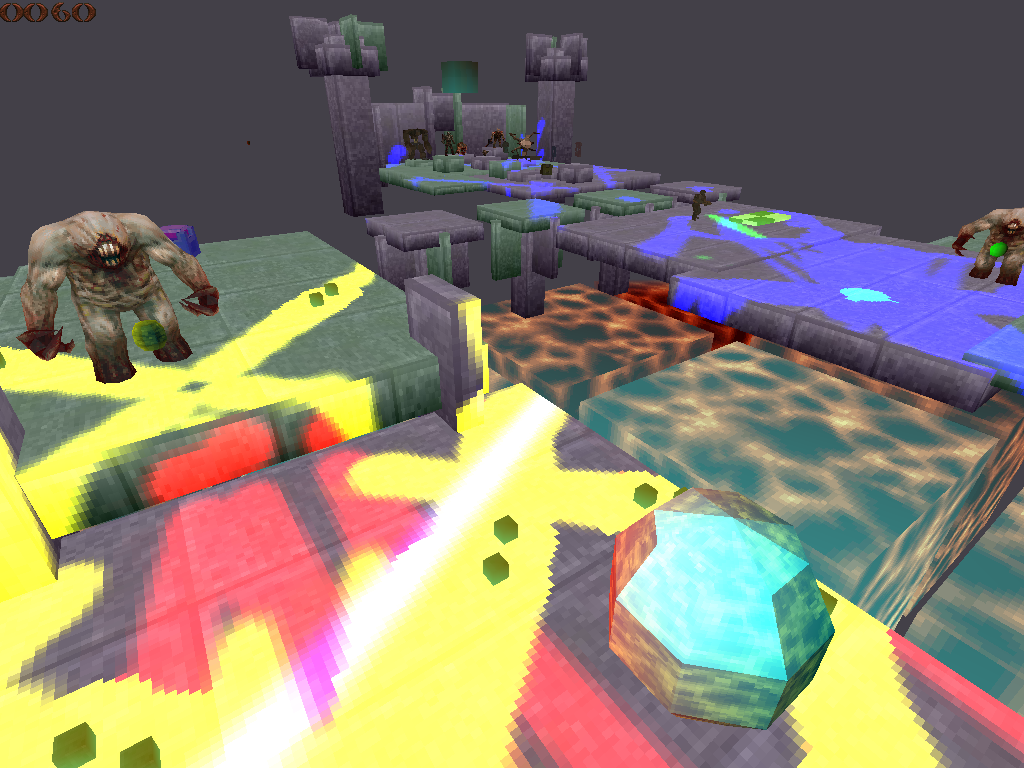

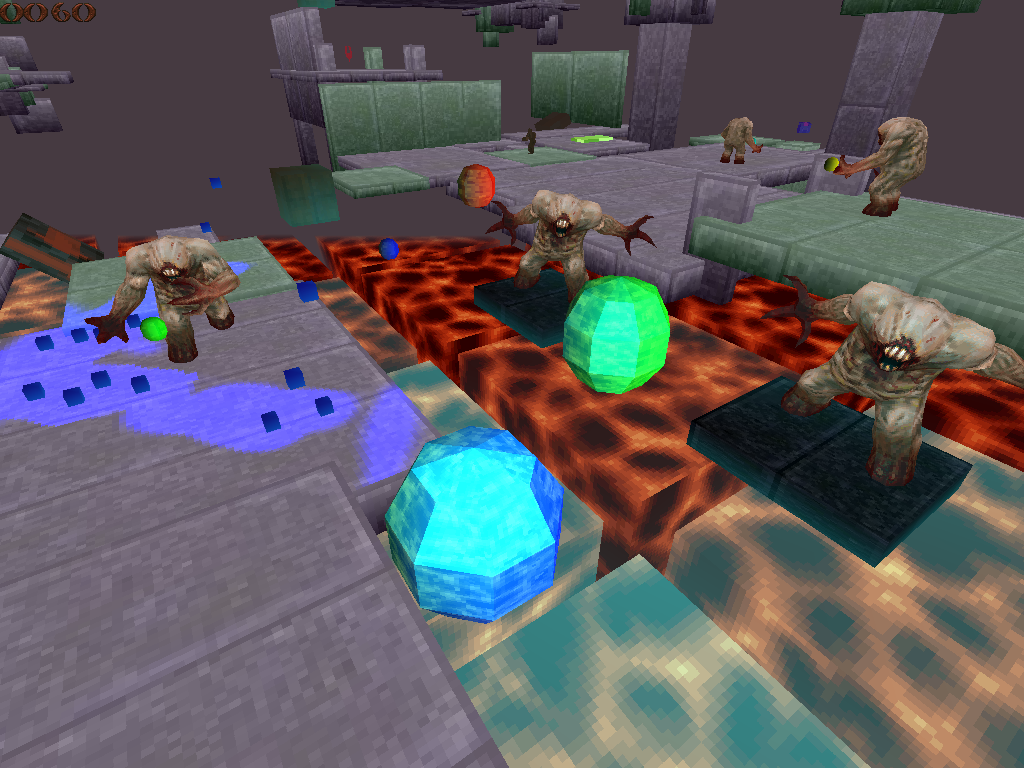

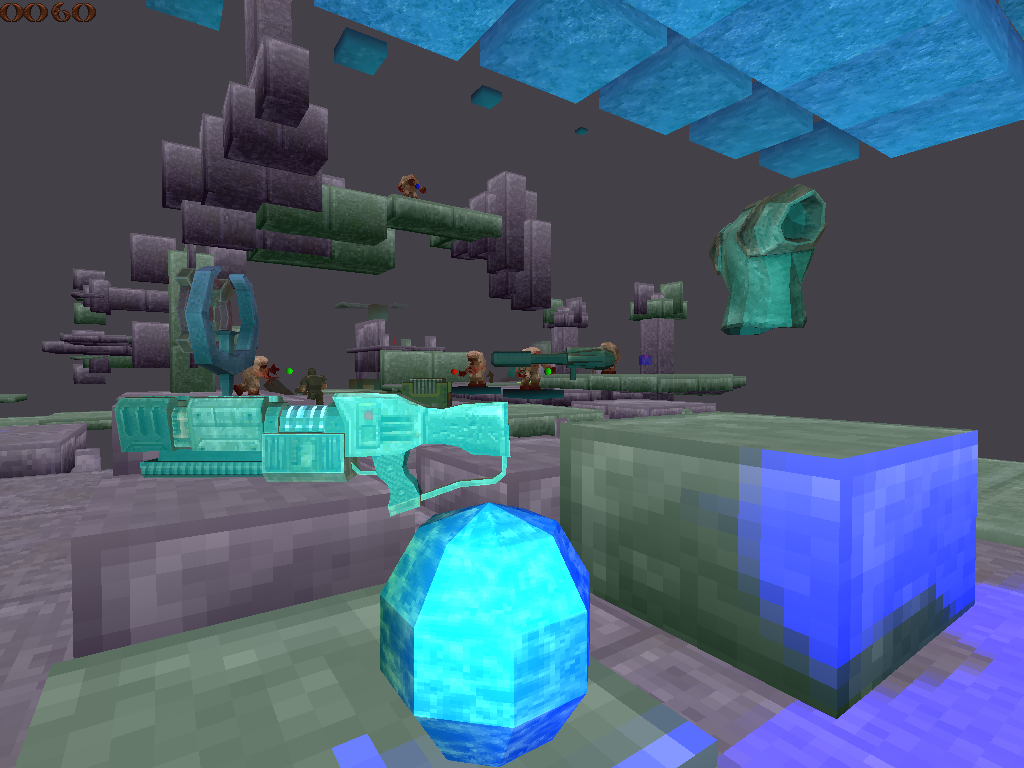

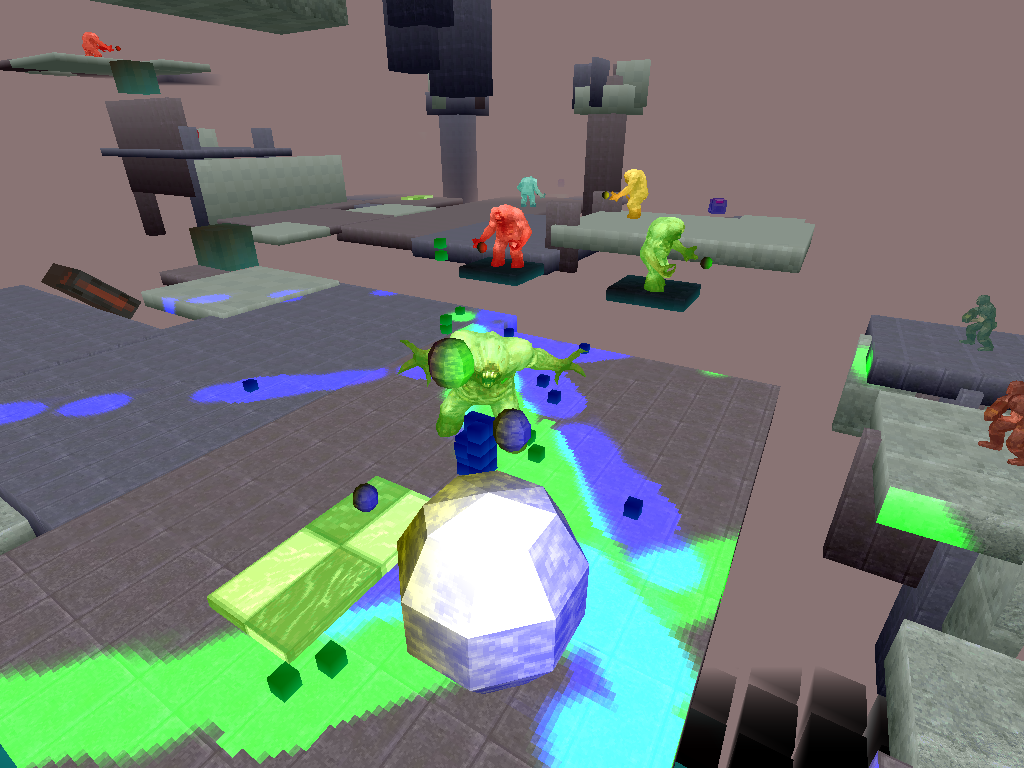

Colliding entities are expressed as axis aligned bounding boxes, with a position and extent. This makes a preliminary overlap test as simple as checking the sum of the extents against the absolute difference between the two positions for each axis. The position and extent are in 16:16 fixed point format, with all calculations being done in 64-bit. The collision system defines colliders and collidees, where colliders are dynamic elements like players, monsters, projectiles and such, and collidees are the mostly static world elements. They are identified in the ECS with respective components. Some of the moving elements are both colliders and collidees, the players and monsters for example. For some elements like the blocks that compose the game world, the bounding box is a direct expression of it’s shape, for other more complex models it is simply an approximation. The collision system’s modus operandi is to take each collider in turn and collide it against each collidee that falls within it’s purview, adjusting the position of the collider as needed, but treating the collidees it encounters as static. This makes for a straightforward scheme, and works perfectly for simple collision encounters such as player vs static world, projectile vs moving entity, but starts to break down when more complicated interactions between two or more moving objects are required.

Pre-process

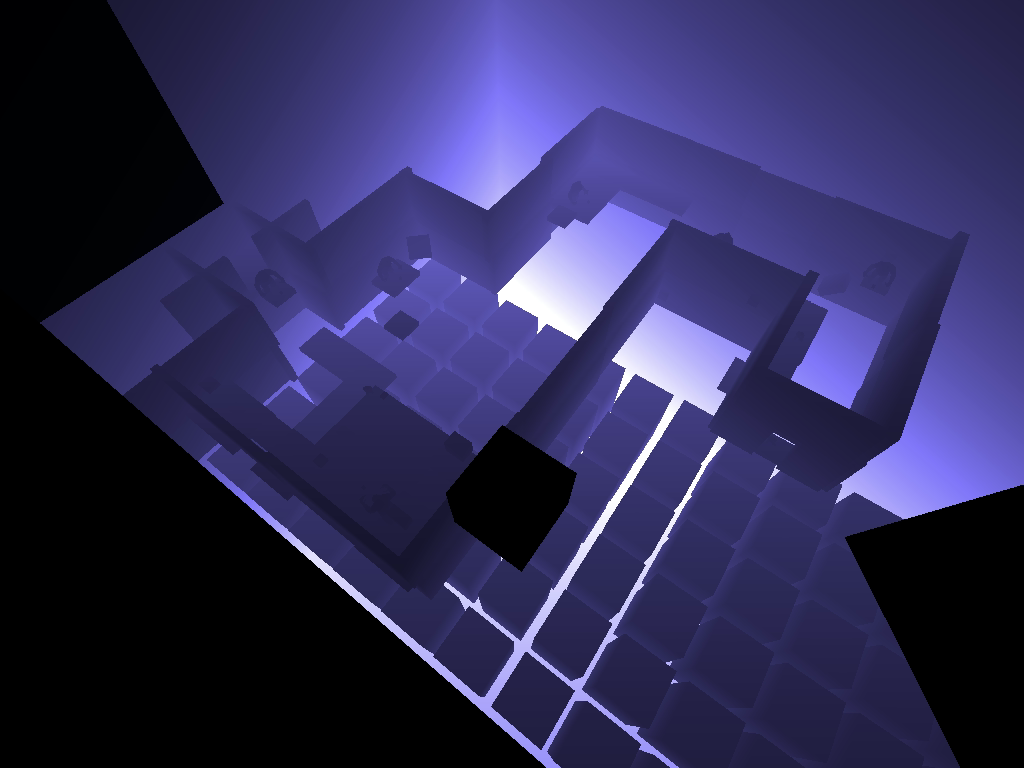

The collison process opens with a pre-step that bins all dynamic collidees into the static collidee BVH. This static BVH was created at setup by binning all static world elements into a regular 3D grid, and then adjusting the bounds of each node of the grid to fully contain it’s set. This loose grid approach creates nodes of differing sizes but means each colliding element is present only once, which I felt was a win over a tight BVH where collidees spanning multiple grid nodes are duplicated across those nodes. There are not nearly as many dynamic collidees needing binning in comparison to static collidees, so the run time binning is not particularly time consuming. The grid nodes are re-adjusted each frame to fit the included dynamic collidees. The entries in the BVH include references back to the source entities in the entity-component system (ECS).

Broad Phase

The next step is the broad phase, which checks each collider against the BVH, using a conservative bounding box that contains the extent of the collider’s potential movement during collision, and outputs a list of potential collidees for narrow phase analysis. We use SSE to process firstly 4 grid nodes at a time, then 4 bounding boxes, and sparse array compression to output the final index lists.

Narrow Phase

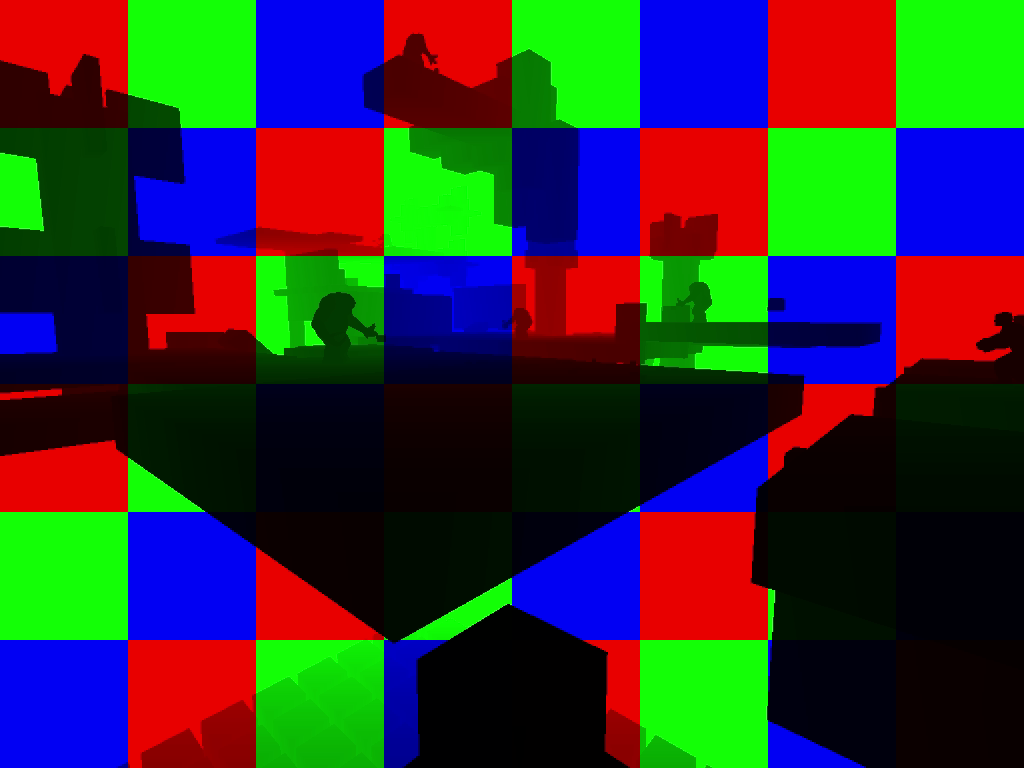

The input into the narrow collison phase is a list of colliders each with an array of potential collidees, those colliders with no potential collidees having been excluded. Each collider has a position, a movement vector and colliding extent, now expressed in fixed point format, and each collidee a position and extent similarly expressed. The narrow phase is iterative, checking the collider against each potential collidee in turn, adjusting it’s position and direction, then re-checking until it’s movement vector is consumed, no more collisions are detected or an upper iterative bound is reached.

Collision checks in the narrow phase are performed by expressing the collider as a point and directed line segment and the collidee as series of axis aligned slabs, with it’s extent expanded by the extent of the collider. Intersection intervals are generated for the line segment against each axis slab, and composited into a final interval for each bounding box, the smallest valid one being the collision interval for the set. The collider’s position is then stepped to the intersection point using this interval. For more details please see Christer Ericson’s excellent text ‘Realtime Collision Detection’, in particular section 5.3.3 ‘Intersecting Ray or Segment Against Box’.

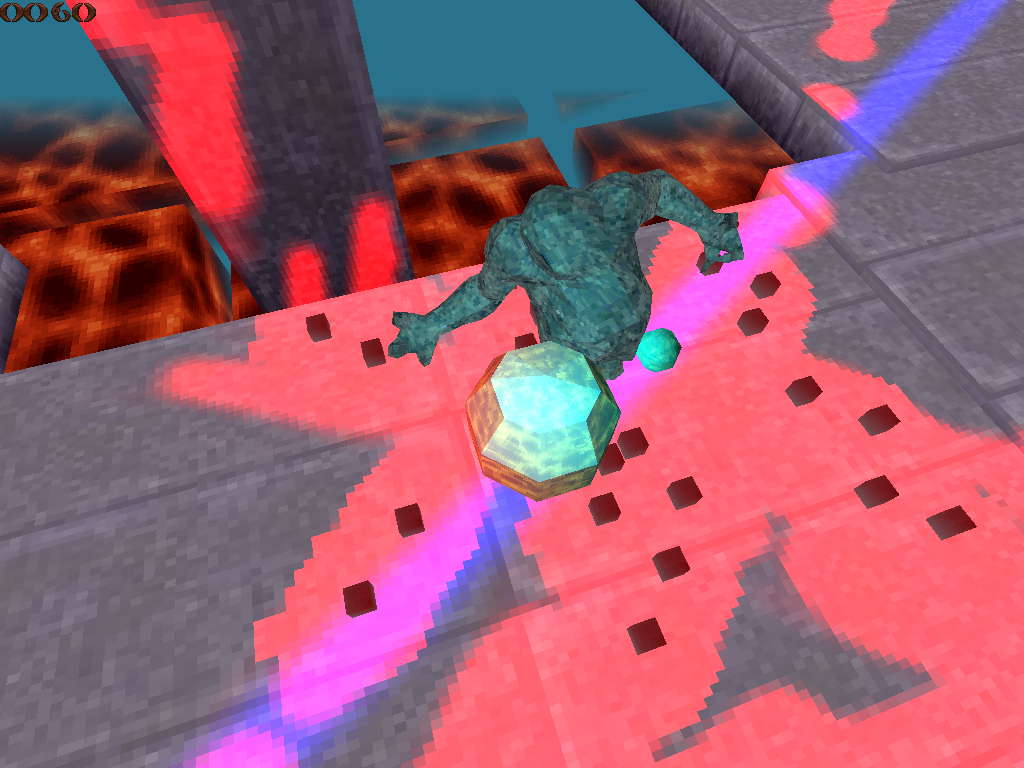

Working with axis aligned boxes means the collider’s position can be clamped to the colliding axis, offset by a small epsilon to push it off the surface, which is a big aid to fidelity. The movement vector of the collider is adjusted, depending on the type of collision response required. For deflection, which yields the sliding behaviour you want for natural moving entities like the player and monsters, the movement vector is set at a tangent to the colliding surface. For reflection or ‘bouncing’, the movement vector is reflected about the surface normal. The collider can also be brought to a stop by the collider, in which case the movement vector is set to zero. The type of collision response for the current collider-collidee pair is obtained from a look up table. The movement vector is expressed as a normal vector and magnitude. I found this approach easier to work with, and more robust. ‘Consuming’ the movement vector as the collision steps proceed becomes simply a case of decrementing the magnitude, and the normal vector can be clamped to an axis without a loss of precision.

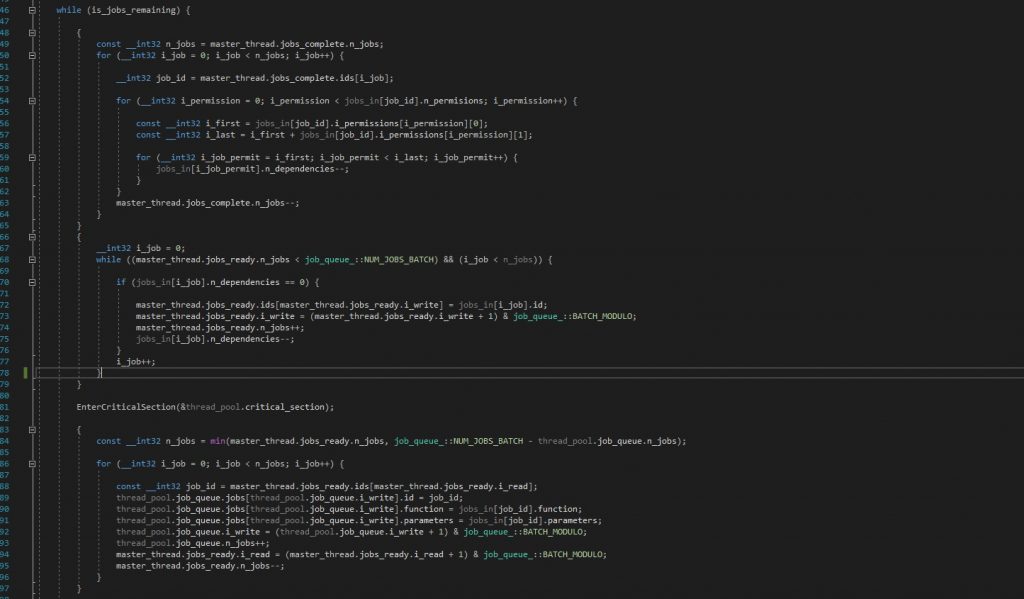

The narrow phase collision process is expressed as a series of jobs fed into the thread pool, where a worker thread is handed a collider and it’s list of potential colliders. The output is a list of valid colliders and collidees with their contact points, which can be parsed by the game logic systems for further collision outcomes.

The only non-axis aligned elements in the game world are the bounce pads, which are treated as an axis aligned box in a coordinate space described by 3 basis vectors that orient the box. Collision proceeds as per usual save colliders are mapped into this coordinate space prior to collision checks. This process hasn’t been as stable as the base one in the past, and I haven’t been confident using oriented surfaces for generalised collisions.

As a final point, some game entities need to be able to step or rise over low obstacles for continuity of motion. This is done by running a second narrow phase collision pass for a slightly elevated copy of the collider, and if that copy can proceed where the original is blocked the entity is allowed to surmount the collidee.

Please check out my previous posts on other game systems.